There will always be irregular streams we have to deal with

What are SPS and PPS

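The SPS and PPS of an H.264 stream carry the parameters needed to initialize an H.264 decoder: the profile and level used for encoding, the picture width and height, the deblocking filter settings, and so on.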
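The first bytes of an SPS have a fixed layout: after the 1-byte NAL header come profile_idc, a byte of constraint flags, and level_idc. A minimal sketch can therefore read the profile and level directly. It assumes a complete SPS NAL unit with the start code already stripped, and the struct and function names are hypothetical. Width, height and most other parameters follow as Exp-Golomb coded fields and are not decoded here.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* The three fixed-size bytes that follow the SPS NAL header. */
typedef struct {
    uint8_t profile_idc;      /* e.g. 66 Baseline, 77 Main, 100 High */
    uint8_t constraint_flags; /* constraint_set0..5 flags + 2 reserved zero bits */
    uint8_t level_idc;        /* level * 10, e.g. 31 means level 3.1 */
} SpsHeader;

/* Returns 0 on success, -1 if the buffer is too short or is not an SPS. */
static int parse_sps_header(const uint8_t *nal, size_t size, SpsHeader *out)
{
    assert(out != NULL);
    if (nal == NULL || size < 4 || (nal[0] & 0x1f) != 7) /* nal_unit_type 7 = SPS */
        return -1;
    out->profile_idc = nal[1];
    out->constraint_flags = nal[2];
    out->level_idc = nal[3];
    return 0;
}
```

For an SPS beginning `67 64 00 1f`, this gives profile_idc 100 (High) and level_idc 31 (level 3.1).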

Copyright notice: this is an original article by the blogger; please credit the source when reposting: http://blog.jerkybible.com/2016/09/03/使用FFMPEG读取视频流中的SPS和PPS/

Original article: "使用FFMPEG读取视频流中的SPS和PPS" (Reading SPS and PPS from a Video Stream with FFMPEG)

Where the SPS and PPS normally are

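In the normal case, the SPS and PPS are carried in FLV's AVCDecoderConfigurationRecord structure (the record defined in ISO/IEC 14496-15), and after FFMPEG has probed the stream, this AVCDecoderConfigurationRecord is exactly the extradata of the AVCodecContext. Here is the structure: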

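| Field | Size | Description |
|---|---|---|
| configurationVersion | 8 bits | always 1 |
| AVCProfileIndication | 8 bits | copy of SPS[1] (profile_idc) |
| profile_compatibility | 8 bits | copy of SPS[2] |
| AVCLevelIndication | 8 bits | copy of SPS[3] (level_idc) |
| reserved | 6 bits | '111111' |
| lengthSizeMinusOne | 2 bits | size of the NALUnitLength field minus 1 |
| reserved | 3 bits | '111' |
| numOfSequenceParameterSets | 5 bits | number of SPS |
| sequenceParameterSetLength | 16 bits | repeated for each SPS |
| sequenceParameterSetNALUnit | 8 × sequenceParameterSetLength bits | repeated for each SPS |
| numOfPictureParameterSets | 8 bits | number of PPS |
| pictureParameterSetLength | 16 bits | repeated for each PPS |
| pictureParameterSetNALUnit | 8 × pictureParameterSetLength bits | repeated for each PPS |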

The FFMPEG code that fetches this data looks roughly like the following, where m_formatCtx is an AVFormatContext instance.

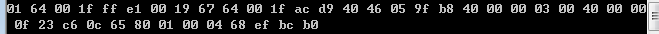
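As a hedged sketch of the printing step: in FFMPEG 3.1 and later the same bytes are also exposed per stream as `m_formatCtx->streams[i]->codecpar->extradata` with length `extradata_size` (the per-stream `AVCodecContext` at `streams[i]->codec` is deprecated and was removed in FFMPEG 5). The helper below, whose name is hypothetical, only formats those bytes as hex, so it can be tried without linking FFMPEG.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Formats `size` bytes as space-separated hex pairs ("01 64 00 1f") into `out`.
 * Returns how many input bytes were formatted; stops early if `out` is full. */
static int format_extradata_hex(const uint8_t *data, int size, char *out, size_t out_size)
{
    size_t pos = 0;
    int i;

    assert(out != NULL && out_size > 0);
    out[0] = '\0';
    for (i = 0; i < size; i++) {
        /* room for an optional space, two hex digits and the terminator */
        if (pos + 4 > out_size)
            break;
        pos += (size_t)snprintf(out + pos, out_size - pos,
                                i ? " %02x" : "%02x", (unsigned)data[i]);
    }
    return i;
}
```

A call site would pass `par->extradata` and `par->extradata_size` for the video stream, found for example with `av_find_best_stream(m_formatCtx, AVMEDIA_TYPE_VIDEO, -1, -1, NULL, 0)`. If extradata is NULL with size 0, the helper simply produces an empty string.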

Take the following printed output as an example.

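According to the AVCDecoderConfigurationRecord structure:

- configurationVersion is byte 1, i.e. 0x01;
- AVCProfileIndication is byte 2, i.e. SPS[1] (0x64);
- profile_compatibility is byte 3, i.e. SPS[2] (0x00);
- AVCLevelIndication is byte 4, i.e. SPS[3] (0x1f);
- lengthSizeMinusOne is the low 2 bits of byte 5: the size of the NALUnitLength field minus 1, usually 3 (4-byte lengths);
- numOfSequenceParameterSets is the low 5 bits of byte 6: the number of SPS, here 1;
- sequenceParameterSetLength is bytes 7 and 8: the length of the SPS, here 0x0019 (25 bytes);
- sequenceParameterSetNALUnit is the next 25 bytes, the SPS itself: 0x6764 0x001f 0xacd9 0x4046 0x059f 0xb840 0x0000 0x0300 0x4000 0x000f 0x23c6 0x0c65 0x80
- numOfPictureParameterSets is the next byte: the number of PPS, here 0x01;
- pictureParameterSetLength is the next 2 bytes: the length of the PPS, here 0x0004;
- pictureParameterSetNALUnit is the last 4 bytes, the PPS itself: 0x68ef 0xbcb0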
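The byte-by-byte walk above can be sketched as a small parser. This is a minimal sketch under stated assumptions: the input is a complete record, only the first SPS and the first PPS are returned, and the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    int length_size;    /* lengthSizeMinusOne + 1, usually 4 */
    const uint8_t *sps; /* first SPS NAL unit (points into the input) */
    uint16_t sps_len;
    const uint8_t *pps; /* first PPS NAL unit (points into the input) */
    uint16_t pps_len;
} AvccInfo;

/* Reads `count` (16-bit length, payload) entries starting at *pos and keeps
 * the first one. Returns -1 if the record is truncated. */
static int read_param_sets(const uint8_t *p, size_t n, size_t *pos, int count,
                           const uint8_t **first, uint16_t *first_len)
{
    int i;
    for (i = 0; i < count; i++) {
        uint16_t len;
        if (*pos + 2 > n)
            return -1;
        len = (uint16_t)((p[*pos] << 8) | p[*pos + 1]); /* big-endian length */
        *pos += 2;
        if (*pos + len > n)
            return -1;
        if (i == 0) {
            *first = p + *pos;
            *first_len = len;
        }
        *pos += len;
    }
    return 0;
}

/* Parses an AVCDecoderConfigurationRecord. Returns 0 on success, -1 otherwise. */
static int parse_avcc(const uint8_t *p, size_t n, AvccInfo *out)
{
    size_t pos = 6;

    assert(out != NULL);
    out->sps = out->pps = NULL;
    out->sps_len = out->pps_len = 0;
    if (p == NULL || n < 7 || p[0] != 1) /* configurationVersion must be 1 */
        return -1;
    out->length_size = (p[4] & 0x03) + 1; /* low 2 bits of byte 5 */
    if (read_param_sets(p, n, &pos, p[5] & 0x1f, &out->sps, &out->sps_len) != 0)
        return -1;
    if (pos + 1 > n)
        return -1;
    pos++; /* numOfPictureParameterSets is read from p[pos - 1] below */
    return read_param_sets(p, n, &pos, p[pos - 1], &out->pps, &out->pps_len);
}
```

Because `sps` and `pps` point into the input buffer, they stay valid only as long as the extradata does.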

Abnormal SPS and PPS

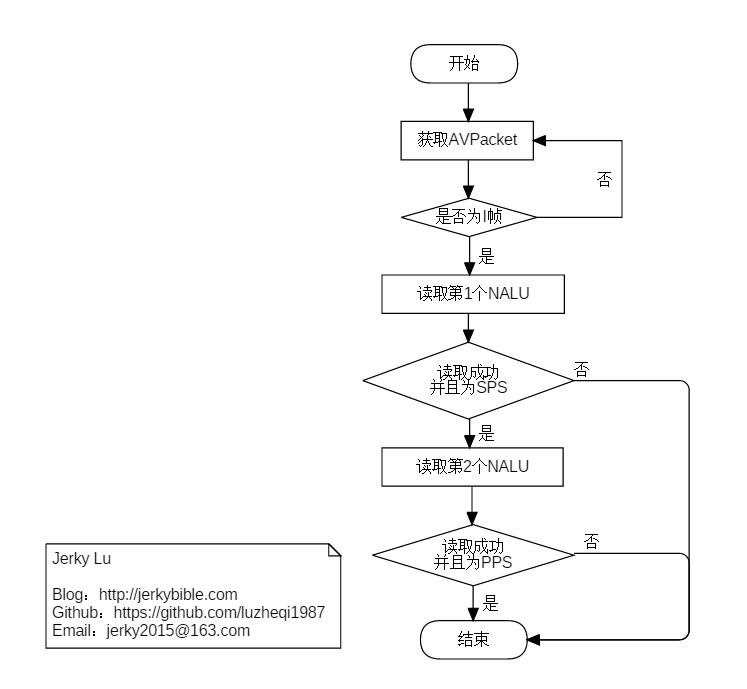

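Recently I have been working on getting the SPS and PPS from an RTSP stream. Whether because of the stream or because of FFMPEG, the extradata was always NULL when reading the RTSP stream. Analyzing the AVPackets later showed that their data did not begin with a NALU length prefix (lengthSizeMinusOne + 1 bytes) as I had expected, but with the start code 0x00000001, which is clearly the Annex B NALU format. Moreover, the I-frames were evidently preceded by the SPS and PPS, which is completely different from my usual understanding that an AVPacket contains only picture data. I then followed Lei Xiaohua's (雷霄骅) "simplest librtmp-based example: publishing H.264 (H.264 published over RTMP)". The rough procedure is as follows.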
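To tell the two packet layouts apart, a minimal heuristic sketch (hypothetical helper names) checks for the 4-byte start code at the beginning of the packet, and shows how a length prefix would be read otherwise. It is only a heuristic: a length-prefixed packet whose first NALU is exactly 1 byte long would also begin with 00 00 00 01.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Heuristic: does the packet use Annex B framing (starts with 00 00 00 01)? */
static int packet_is_annexb(const uint8_t *p, size_t n)
{
    return n >= 4 && p[0] == 0x00 && p[1] == 0x00 && p[2] == 0x00 && p[3] == 0x01;
}

/* For length-prefixed packets: reads the big-endian NALU length stored in
 * `length_size` bytes (lengthSizeMinusOne + 1) at the start of p. */
static uint32_t read_nal_length(const uint8_t *p, int length_size)
{
    uint32_t len = 0;
    int i;

    assert(p != NULL && length_size >= 1 && length_size <= 4);
    for (i = 0; i < length_size; i++)
        len = (len << 8) | p[i];
    return len;
}
```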

First, take the first NALU from the packet; here we treat it as the SPS.


Then read the next NALU, which we treat as the PPS.
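The two steps above, taking the first NALU as the SPS and the next one as the PPS, can be sketched as a start-code scanner. This is not the code from Lei Xiaohua's example, only a minimal sketch in the same spirit; it assumes an Annex B buffer with 3- or 4-byte start codes, and the type and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

typedef struct {
    const uint8_t *data; /* starts at the NAL header byte, start code excluded */
    size_t size;
} Nalu;

/* Length of a start code at p[0..n): 3 for 00 00 01, 4 for 00 00 00 01, else 0. */
static size_t start_code_len(const uint8_t *p, size_t n)
{
    if (n >= 3 && p[0] == 0 && p[1] == 0 && p[2] == 1)
        return 3;
    if (n >= 4 && p[0] == 0 && p[1] == 0 && p[2] == 0 && p[3] == 1)
        return 4;
    return 0;
}

/* Finds the next NALU at or after *pos. Returns 1 and advances *pos to the
 * following start code (or the buffer end), or 0 if no NALU is left. */
static int read_next_nalu(const uint8_t *buf, size_t size, size_t *pos, Nalu *out)
{
    size_t i = *pos, sc = 0, start;

    assert(buf != NULL && pos != NULL && out != NULL);
    /* skip to the first start code */
    while (i < size && (sc = start_code_len(buf + i, size - i)) == 0)
        i++;
    if (i >= size)
        return 0;
    start = i + sc;

    /* the NALU ends where the next start code begins, or at the buffer end */
    for (i = start; i < size; i++)
        if (start_code_len(buf + i, size - i) != 0)
            break;

    out->data = buf + start;
    out->size = i - start;
    *pos = i;
    return 1;
}
```

Calling it twice with the same `pos` on an I-frame packet that begins with the SPS and PPS yields them in order. The returned pointers reference the packet data, so copy the bytes before the AVPacket is released.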

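This gives us the SPS and PPS data. Of course, each NALU obtained this way should finally be checked to confirm that it really is an SPS or a PPS: nal_unit_type, the low 5 bits of the NAL header byte, is 0x07 for an SPS and 0x08 for a PPS, so their header bytes typically read 0x67 and 0x68.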
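The type check amounts to masking the NAL header byte. A minimal sketch with hypothetical names:

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* nal_unit_type values used here (H.264 Table 7-1) */
enum { NAL_IDR_SLICE = 5, NAL_SPS = 7, NAL_PPS = 8 };

/* The NAL header byte is forbidden_zero_bit (1 bit) | nal_ref_idc (2 bits)
 * | nal_unit_type (5 bits). Returns nal_unit_type, or -1 for an empty buffer. */
static int nal_unit_type(const uint8_t *nal, size_t size)
{
    assert(nal != NULL || size == 0);
    return size > 0 ? (nal[0] & 0x1f) : -1;
}

/* Returns 1 if the two NALUs really are an SPS followed by a PPS. */
static int is_sps_pps_pair(const uint8_t *sps, size_t sps_size,
                           const uint8_t *pps, size_t pps_size)
{
    return nal_unit_type(sps, sps_size) == NAL_SPS &&
           nal_unit_type(pps, pps_size) == NAL_PPS;
}
```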

Summary

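SPS and PPS read out separately like this can be used in push-streaming code that does not re-encode. I cannot yet write that push-streaming code with FFMPEG, because I do not know how FFMPEG consumes SPS and PPS obtained this way; what is clear is that re-publishing them directly over RTMP works without much trouble.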
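One possible way to hand such SPS and PPS to code that expects a record like the FLV extradata discussed earlier is to pack them back into an AVCDecoderConfigurationRecord. This is an assumption, not something tested against FFMPEG: the sketch writes one SPS and one PPS with 4-byte NALU lengths (lengthSizeMinusOne = 3), copies profile, compatibility and level from SPS[1..3] as in the field breakdown above, and uses a hypothetical function name.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Packs one SPS and one PPS (start codes stripped) into an
 * AVCDecoderConfigurationRecord. Returns the number of bytes written,
 * or 0 if the SPS is too short or `out` is too small. */
static size_t build_avcc(const uint8_t *sps, uint16_t sps_len,
                         const uint8_t *pps, uint16_t pps_len,
                         uint8_t *out, size_t out_size)
{
    size_t need = 11u + sps_len + pps_len, pos = 0;

    assert(sps != NULL && pps != NULL && out != NULL);
    if (sps_len < 4 || out_size < need)
        return 0;
    out[pos++] = 0x01;        /* configurationVersion */
    out[pos++] = sps[1];      /* AVCProfileIndication */
    out[pos++] = sps[2];      /* profile_compatibility */
    out[pos++] = sps[3];      /* AVCLevelIndication */
    out[pos++] = 0xfc | 0x03; /* 6 reserved bits + lengthSizeMinusOne = 3 */
    out[pos++] = 0xe0 | 0x01; /* 3 reserved bits + numOfSequenceParameterSets = 1 */
    out[pos++] = (uint8_t)(sps_len >> 8);
    out[pos++] = (uint8_t)(sps_len & 0xff);
    memcpy(out + pos, sps, sps_len);
    pos += sps_len;
    out[pos++] = 0x01;        /* numOfPictureParameterSets */
    out[pos++] = (uint8_t)(pps_len >> 8);
    out[pos++] = (uint8_t)(pps_len & 0xff);
    memcpy(out + pos, pps, pps_len);
    pos += pps_len;
    return pos;
}
```

With the 25-byte SPS and 4-byte PPS from the example, this produces a 40-byte record with the same layout as the printed extradata.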